Compare commits

v3.2.0...152-altern (21 commits)

| Author | SHA1 | Date |
|---|---|---|
| | fb2642de2c | |
| | ffc7f29688 | |
| | 2c30bff45d | |
| | dc7a0ae6b7 | |
| | d3cf53c609 | |
| | 0555277c50 | |
| | aa4b168c44 | |
| | 650f1cad92 | |
| | 8eebd424c8 | |
| | a1a3aaca18 | |
| | d779830df6 | |
| | 4375cd3ebc | |
| | b0c7c13e5e | |
| | bb4db5d342 | |
| | 64df0e0b32 | |
| | 72c9634832 | |
| | a4cfc53581 | |
| | d4feefd639 | |
| | 434efc79d8 | |
| | 54771b2d78 | |
| | fceb36c723 | |

.gitattributes (vendored): 1 changed line
@@ -1 +1,2 @@
 *.tsx linguist-detectable=false
+*.html linguist-detectable=false

.gitignore (vendored): 4 changed lines
@@ -1,3 +1,7 @@
+.pre-commit-config.yaml
+.direnv/
+result/
+result
 dist
 .pnpm-debug.log
 node_modules

79

README.md

79

README.md

@@ -3,12 +3,6 @@

|

|||||||

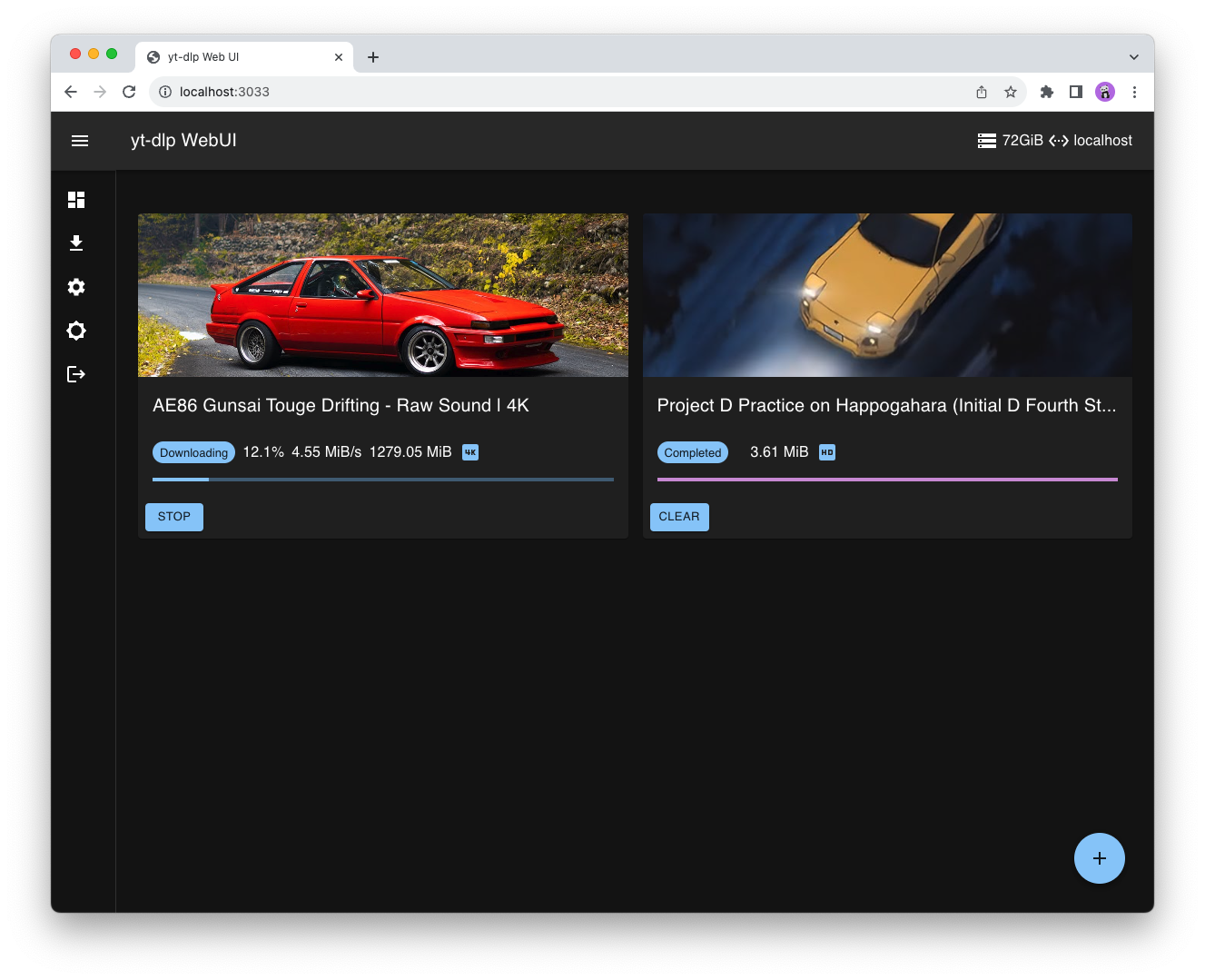

A not so terrible web ui for yt-dlp.

|

A not so terrible web ui for yt-dlp.

|

||||||

Created for the only purpose of *fetching* videos from my server/nas.

|

Created for the only purpose of *fetching* videos from my server/nas.

|

||||||

|

|

||||||

Intended to be used with docker and in standalone mode. 😎👍

|

|

||||||

|

|

||||||

Developed to be as lightweight as possible (because my server is basically an intel atom sbc).

|

|

||||||

|

|

||||||

The bottleneck remains yt-dlp startup time.

|

|

||||||

|

|

||||||

**Docker images are available on [Docker Hub](https://hub.docker.com/r/marcobaobao/yt-dlp-webui) or [ghcr.io](https://github.com/marcopeocchi/yt-dlp-web-ui/pkgs/container/yt-dlp-web-ui)**.

|

**Docker images are available on [Docker Hub](https://hub.docker.com/r/marcobaobao/yt-dlp-webui) or [ghcr.io](https://github.com/marcopeocchi/yt-dlp-web-ui/pkgs/container/yt-dlp-web-ui)**.

|

||||||

|

|

||||||

```sh

|

```sh

|

||||||

@@ -19,45 +13,9 @@ docker pull marcobaobao/yt-dlp-webui
 docker pull ghcr.io/marcopeocchi/yt-dlp-web-ui:latest
 ```
 
+## Video showcase
 [app.webm](https://github.com/marcopeocchi/yt-dlp-web-ui/assets/35533749/91545bc4-233d-4dde-8504-27422cb26964)
 
-
-
-
-
-### Integrated File browser
-Stream or download your content, easily.
-
-
-
-## Changelog
-```
-05/03/22: Korean translation by kimpig
-
-03/03/22: cut-down image size by switching to Alpine linux based container
-
-01/03/22: Chinese translation by deluxghost
-
-03/02/22: i18n enabled! I need help with the translations :/
-
-27/01/22: Multidownload implemented!
-
-26/01/22: Multiple downloads are being implemented. Maybe by next release they will be there.
-Refactoring and JSDoc.
-
-04/01/22: Background jobs now are retrieved!! It's still rudimentary but it leverages on yt-dlp resume feature.
-
-05/05/22: Material UI update.
-
-03/06/22: The most requested feature finally implemented: Format Selection!!
-
-08/06/22: ARM builds.
-
-28/06/22: Reworked resume download feature. Now it's pratically instantaneous. It no longer stops and restarts each process, references to each process are saved in memory.
-
-12/01/23: Switched from TypeScript to Golang on the backend. It was a great effort but it was worth it.
-```
-
 ## Settings
 
 The currently avaible settings are:

@@ -71,23 +29,13 @@ The currently avaible settings are:
 - Pass custom yt-dlp arguments safely
 - Download queue (limit concurrent downloads)
 
-
-
-
 ## Format selection
 
 This feature is disabled by default as this intended to be used to retrieve the best quality automatically.
 
 To enable it just go to the settings page and enable the **Enable video/audio formats selection** flag!
 
-## Troubleshooting
-- **It says that it isn't connected/ip in the header is not defined.**
-- You must set the server ip address in the settings section (gear icon).
-- **The download doesn't start.**
-- As before server address is not specified or simply yt-dlp process takes a lot of time to fire up. (Forking yt-dlp isn't fast especially if you have a lower-end/low-power NAS/server/desktop where the server is running)
-
-## [Docker](https://github.com/marcopeocchi/yt-dlp-web-ui/pkgs/container/yt-dlp-web-ui) installation
-## Docker run
+## [Docker](https://github.com/marcopeocchi/yt-dlp-web-ui/pkgs/container/yt-dlp-web-ui) run
 ```sh
 docker pull marcobaobao/yt-dlp-webui
 docker run -d -p 3033:3033 -v <your dir>:/downloads marcobaobao/yt-dlp-webui

@@ -177,7 +125,7 @@ Usage yt-dlp-webui:
   -port int
     	Port where server will listen at (default 3033)
   -qs int
-    	Download queue size (default 8)
+    	Download queue size (defaults to the number of logical CPU. A min of 2 is recomended.)
   -user string
     	Username required for auth
   -pass string
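The `-qs` flag above sizes the download queue that the settings list describes as limiting concurrent downloads. As a rough illustration of that behaviour (not the project's code, whose backend is written in Go), here is a minimal TypeScript sketch of a queue that runs at most `size` jobs at once. `DownloadQueue`, `defaultQueueSize` and the `logicalCpus` argument are hypothetical names, and the floor of 2 mirrors the README's recommendation rather than a documented rule.

```typescript
// Hypothetical sketch, not the project's code (its backend is written in Go).

// Mirrors the README's guidance for -qs: default to the number of logical
// CPUs, and never go below the recommended minimum of 2.
function defaultQueueSize(logicalCpus: number): number {
  return Math.max(2, Math.floor(logicalCpus))
}

// A FIFO queue that lets at most `size` async jobs run at the same time.
class DownloadQueue {
  private running = 0
  private readonly waiting: Array<() => void> = []
  private readonly size: number

  constructor(size: number) {
    if (!Number.isInteger(size) || size < 1) {
      throw new RangeError('queue size must be a positive integer')
    }
    this.size = size
  }

  // Resolves with the job's result once a slot was free and the job settled.
  async push<T>(job: () => Promise<T>): Promise<T> {
    await this.acquire()
    try {
      return await job()
    } finally {
      this.release()
    }
  }

  private acquire(): Promise<void> {
    if (this.running < this.size) {
      this.running++
      return Promise.resolve()
    }
    // Park the caller until a running job hands over its slot.
    return new Promise<void>(resolve => {
      this.waiting.push(() => resolve())
    })
  }

  private release(): void {
    const next = this.waiting.shift()
    if (next) {
      next() // hand the slot straight to the oldest waiter; `running` is unchanged
    } else {
      this.running--
    }
  }
}
```

In this sketch a queue built with size 4 starts a fifth job only after one of the first four settles; handing the slot directly to the oldest waiter keeps the order first-in, first-out.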

@@ -187,6 +135,7 @@ Usage yt-dlp-webui:
 ### Config file
 By running `yt-dlp-webui` in standalone mode you have the ability to also specify a config file.
 The config file **will overwrite what have been passed as cli argument**.
+With Docker, inside the mounted `/conf` volume inside there must be a file named `config.yml`.
 
 ```yaml
 # Simple configuration file for yt-dlp webui
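The README states that the config file overwrites whatever was passed as a CLI argument. A minimal sketch of that precedence rule, assuming both sources have already been parsed into plain objects. The `Settings` shape and the `overrideWith` helper are hypothetical (the shape only reuses flag names from the usage text), and the real Go server may resolve this differently.

```typescript
// Hypothetical sketch of the precedence rule from the README: values read from
// the config file overwrite values passed on the command line.
// The Settings shape is illustrative; it only reuses flag names from the usage text.
type Settings = {
  port: number
  qs: number
  user: string
  pass: string
}

// Returns a new object: CLI values first, then every key the config file
// actually sets. Keys missing from the file keep their CLI value.
function overrideWith<T extends object>(cli: T, fromFile: Partial<T>): T {
  const result = { ...cli }
  for (const key of Object.keys(fromFile) as Array<keyof T>) {
    const value = fromFile[key]
    if (value !== undefined) {
      result[key] = value as T[keyof T]
    }
  }
  return result
}
```

In this sketch a field the YAML file leaves out keeps its CLI value; whether the real server treats an empty or zero value in the file the same way is not stated in the README.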

@@ -284,17 +233,17 @@ Want to build your own frontend? We got you covered 🤠
 `yt-dlp-webui` now exposes a nice **JSON-RPC 1.0** interface through Websockets and HTTP-POST
 It is **planned** to also expose a **gRPC** server.
 
-Just as an overview, these are the available methods:
-- Service.Exec
-- Service.Progress
-- Service.Formats
-- Service.Pending
-- Service.Running
-- Service.Kill
-- Service.KillAll
-- Service.Clear
-
 For more information open an issue on GitHub and I will provide more info ASAP.
 
+## Nix
+This repo adds support for Nix(OS) in various ways through a `flake-parts` flake.
+For more info, please refer to the [official documentation](https://nixos.org/learn/).
+
 ## What yt-dlp-webui is not
 `yt-dlp-webui` isn't your ordinary website where to download stuff from the internet, so don't try asking for links of where this is hosted. It's a self hosted platform for a Linux NAS.
+
+## Troubleshooting
+- **It says that it isn't connected/ip in the header is not defined.**
+- You must set the server ip address in the settings section (gear icon).
+- **The download doesn't start.**
+- As before server address is not specified or simply yt-dlp process takes a lot of time to fire up. (Forking yt-dlp isn't fast especially if you have a lower-end/low-power NAS/server/desktop where the server is running)
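The README section above says the server speaks JSON-RPC 1.0 over WebSockets and HTTP POST, and the removed list names methods such as `Service.Running`. A minimal sketch of the message shapes involved, with transport and endpoint paths deliberately left out. `makeRequest` and `unwrap` are hypothetical helpers, and sending exactly one argument object is an assumption: Go's `net/rpc/jsonrpc` server codec, for instance, decodes `params` as a one-element array, but the README does not say which codec the server uses.

```typescript
// Hypothetical sketch of JSON-RPC 1.0 message shapes for methods such as
// "Service.Running". Endpoint paths and parameter payloads are assumptions
// and deliberately left out.

type RpcId = number | string | null

type RpcRequest = { method: string; params: unknown[]; id: RpcId }
type RpcResponse<T> = { result: T | null; error: unknown; id: RpcId }

let lastId = 0

// JSON-RPC 1.0 sends positional params as an array plus a caller-chosen id.
// This sketch always sends a single argument object (an assumption).
function makeRequest(method: string, arg: unknown = {}): RpcRequest {
  lastId += 1
  return { method, params: [arg], id: lastId }
}

// Parses a raw response body and returns `result`; throws when `error` is set
// or when the id does not match the request it is supposed to answer.
function unwrap<T>(raw: string, request: RpcRequest): T {
  const res = JSON.parse(raw) as RpcResponse<T>
  if (res.id !== request.id) {
    throw new Error(`response id ${String(res.id)} does not match ${String(request.id)}`)
  }
  if (res.error !== null && res.error !== undefined) {
    throw new Error(`rpc error: ${JSON.stringify(res.error)}`)
  }
  return res.result as T
}
```

Matching on `id` matters mostly on the WebSocket transport, where several requests can be in flight on one connection; over HTTP POST each response arrives on its own request.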

env.nix: 4 changed lines
@@ -1,4 +0,0 @@
-{ pkgs ? import <nixpkgs> {} }:
-pkgs.mkShell {
-  nativeBuildInputs = with pkgs.buildPackages; [ yt-dlp nodejs_22 yarn-berry go ];
-}

flake.lock (generated; Normal file): 149 changed lines
@@ -0,0 +1,149 @@
+{
+  "nodes": {
+    "flake-compat": {
+      "flake": false,
+      "locked": {
+        "lastModified": 1696426674,
+        "narHash": "sha256-kvjfFW7WAETZlt09AgDn1MrtKzP7t90Vf7vypd3OL1U=",
+        "owner": "edolstra",
+        "repo": "flake-compat",
+        "rev": "0f9255e01c2351cc7d116c072cb317785dd33b33",
+        "type": "github"
+      },
+      "original": {
+        "owner": "edolstra",
+        "repo": "flake-compat",
+        "type": "github"
+      }
+    },
+    "flake-parts": {
+      "inputs": {
+        "nixpkgs-lib": "nixpkgs-lib"
+      },
+      "locked": {
+        "lastModified": 1722555600,
+        "narHash": "sha256-XOQkdLafnb/p9ij77byFQjDf5m5QYl9b2REiVClC+x4=",
+        "owner": "hercules-ci",
+        "repo": "flake-parts",
+        "rev": "8471fe90ad337a8074e957b69ca4d0089218391d",
+        "type": "github"
+      },
+      "original": {
+        "owner": "hercules-ci",
+        "repo": "flake-parts",
+        "type": "github"
+      }
+    },
+    "gitignore": {
+      "inputs": {
+        "nixpkgs": [
+          "pre-commit-hooks-nix",
+          "nixpkgs"
+        ]
+      },
+      "locked": {
+        "lastModified": 1709087332,
+        "narHash": "sha256-HG2cCnktfHsKV0s4XW83gU3F57gaTljL9KNSuG6bnQs=",
+        "owner": "hercules-ci",
+        "repo": "gitignore.nix",
+        "rev": "637db329424fd7e46cf4185293b9cc8c88c95394",
+        "type": "github"
+      },
+      "original": {
+        "owner": "hercules-ci",
+        "repo": "gitignore.nix",
+        "type": "github"
+      }
+    },
+    "nixpkgs": {
+      "locked": {
+        "lastModified": 1723637854,
+        "narHash": "sha256-med8+5DSWa2UnOqtdICndjDAEjxr5D7zaIiK4pn0Q7c=",
+        "owner": "NixOS",
+        "repo": "nixpkgs",
+        "rev": "c3aa7b8938b17aebd2deecf7be0636000d62a2b9",
+        "type": "github"
+      },
+      "original": {
+        "owner": "NixOS",
+        "ref": "nixos-unstable",
+        "repo": "nixpkgs",
+        "type": "github"
+      }
+    },
+    "nixpkgs-lib": {
+      "locked": {
+        "lastModified": 1722555339,
+        "narHash": "sha256-uFf2QeW7eAHlYXuDktm9c25OxOyCoUOQmh5SZ9amE5Q=",
+        "type": "tarball",
+        "url": "https://github.com/NixOS/nixpkgs/archive/a5d394176e64ab29c852d03346c1fc9b0b7d33eb.tar.gz"
+      },
+      "original": {
+        "type": "tarball",
+        "url": "https://github.com/NixOS/nixpkgs/archive/a5d394176e64ab29c852d03346c1fc9b0b7d33eb.tar.gz"
+      }
+    },
+    "nixpkgs-stable": {
+      "locked": {
+        "lastModified": 1720386169,
+        "narHash": "sha256-NGKVY4PjzwAa4upkGtAMz1npHGoRzWotlSnVlqI40mo=",
+        "owner": "NixOS",
+        "repo": "nixpkgs",
+        "rev": "194846768975b7ad2c4988bdb82572c00222c0d7",
+        "type": "github"
+      },
+      "original": {
+        "owner": "NixOS",
+        "ref": "nixos-24.05",
+        "repo": "nixpkgs",
+        "type": "github"
+      }
+    },
+    "nixpkgs_2": {
+      "locked": {
+        "lastModified": 1719082008,
+        "narHash": "sha256-jHJSUH619zBQ6WdC21fFAlDxHErKVDJ5fpN0Hgx4sjs=",
+        "owner": "NixOS",
+        "repo": "nixpkgs",
+        "rev": "9693852a2070b398ee123a329e68f0dab5526681",
+        "type": "github"
+      },
+      "original": {
+        "owner": "NixOS",
+        "ref": "nixpkgs-unstable",
+        "repo": "nixpkgs",
+        "type": "github"
+      }
+    },
+    "pre-commit-hooks-nix": {
+      "inputs": {
+        "flake-compat": "flake-compat",
+        "gitignore": "gitignore",
+        "nixpkgs": "nixpkgs_2",
+        "nixpkgs-stable": "nixpkgs-stable"
+      },
+      "locked": {
+        "lastModified": 1723803910,
+        "narHash": "sha256-yezvUuFiEnCFbGuwj/bQcqg7RykIEqudOy/RBrId0pc=",
+        "owner": "cachix",
+        "repo": "pre-commit-hooks.nix",
+        "rev": "bfef0ada09e2c8ac55bbcd0831bd0c9d42e651ba",
+        "type": "github"
+      },
+      "original": {
+        "owner": "cachix",
+        "repo": "pre-commit-hooks.nix",
+        "type": "github"
+      }
+    },
+    "root": {
+      "inputs": {
+        "flake-parts": "flake-parts",
+        "nixpkgs": "nixpkgs",
+        "pre-commit-hooks-nix": "pre-commit-hooks-nix"
+      }
+    }
+  },
+  "root": "root",
+  "version": 7
+}

flake.nix (Normal file): 51 changed lines
@@ -0,0 +1,51 @@
+{
+  description = "A terrible web ui for yt-dlp. Designed to be self-hosted.";
+
+  inputs = {
+    flake-parts.url = "github:hercules-ci/flake-parts";
+    nixpkgs.url = "github:NixOS/nixpkgs/nixos-unstable";
+    pre-commit-hooks-nix.url = "github:cachix/pre-commit-hooks.nix";
+  };
+
+  outputs = inputs@{ self, flake-parts, ... }:
+    flake-parts.lib.mkFlake { inherit inputs; } {
+      imports = [
+        inputs.pre-commit-hooks-nix.flakeModule
+      ];
+      systems = [
+        "x86_64-linux"
+      ];
+      perSystem = { config, self', pkgs, ... }: {
+
+        packages = {
+          yt-dlp-web-ui-frontend = pkgs.callPackage ./nix/frontend.nix { };
+          default = pkgs.callPackage ./nix/server.nix {
+            inherit (self'.packages) yt-dlp-web-ui-frontend;
+          };
+        };
+
+        checks = import ./nix/tests { inherit self pkgs; };
+
+        pre-commit = {
+          check.enable = true;
+          settings = {
+            hooks = {
+              ${self'.formatter.pname}.enable = true;
+              deadnix.enable = true;
+              nil.enable = true;
+              statix.enable = true;
+            };
+          };
+        };
+
+        devShells.default = pkgs.callPackage ./nix/devShell.nix {
+          inputsFrom = [ config.pre-commit.devShell ];
+        };
+
+        formatter = pkgs.nixpkgs-fmt;
+      };
+      flake = {
+        nixosModules.default = import ./nix/module.nix self.packages;
+      };
+    };
+}

@@ -63,6 +63,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   german:
     urlInput: Video URL
@@ -123,6 +124,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   french:
     urlInput: URL vidéo de YouTube ou d'un autre service pris en charge
@@ -185,6 +187,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   italian:
     urlInput: URL Video (uno per linea)
@@ -244,6 +247,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   chinese:
     urlInput: 视频 URL
@@ -294,17 +298,18 @@ languages:
     templatesEditorContentLabel: 模板内容
     logsTitle: '日志'
     awaitingLogs: '正在等待日志…'
-    bulkDownload: 'Download files in a zip archive'
-    livestreamURLInput: Livestream URL
-    livestreamStatusWaiting: Waiting/Wait start
-    livestreamStatusDownloading: Downloading
-    livestreamStatusCompleted: Completed
-    livestreamStatusErrored: Errored
-    livestreamStatusUnknown: Unknown
+    bulkDownload: '下载 zip 压缩包中的文件'
+    livestreamURLInput: 直播 URL
+    livestreamStatusWaiting: 等待直播开始
+    livestreamStatusDownloading: 下载中
+    livestreamStatusCompleted: 已完成
+    livestreamStatusErrored: 发生错误
+    livestreamStatusUnknown: 未知
     livestreamDownloadInfo: |
-      This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
-      If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
-    livestreamExperimentalWarning: This feature is still experimental. Something might break!
+      本功能将会监控即将开始的直播流,每个进程都会传入参数:--wait-for-video 10 (重试间隔10秒)
+      如果直播已经开始,那么依然可以下载,但是不会记录下载进度。
+      直播开始后,将会转移到下载页面
+    livestreamExperimentalWarning: 实验性功能,可能存在未知Bug,请谨慎使用
   spanish:
     urlInput: URL de YouTube u otro servicio compatible
     statusTitle: Estado
@@ -362,6 +367,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   russian:
     urlInput: URL-адрес YouTube или любого другого поддерживаемого сервиса
@@ -420,6 +426,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   korean:
     urlInput: YouTube나 다른 지원되는 사이트의 URL
@@ -478,6 +485,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   japanese:
     urlInput: YouTubeまたはサポート済み動画のURL
@@ -537,6 +545,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   catalan:
     urlInput: URL de YouTube o d'un altre servei compatible
@@ -595,6 +604,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   ukrainian:
     urlInput: URL-адреса YouTube або будь-якого іншого підтримуваного сервісу
@@ -653,6 +663,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   polish:
     urlInput: Adres URL YouTube lub innej obsługiwanej usługi
@@ -711,6 +722,7 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
   swedish:
     urlInput: Videolänk (en per rad)
@@ -775,4 +787,5 @@ languages:
     livestreamDownloadInfo: |
       This will monitor yet to start livestream. Each process will be executed with --wait-for-video 10.
       If an already started livestream is provided it will be still downloaded but its progress will not be tracked.
+      Once started the livestream will be migrated to the downloads page.
     livestreamExperimentalWarning: This feature is still experimental. Something might break!
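The `livestreamDownloadInfo` text repeated in every locale above says each monitored livestream process runs with `--wait-for-video 10`; yt-dlp's `--wait-for-video MIN[-MAX]` option waits for a scheduled stream, retrying after the given number of seconds. A minimal sketch of assembling such an argument list, assuming URLs arrive as plain strings. `livestreamArgs` and its `extraArgs` parameter are hypothetical; only the flag itself comes from the source.

```typescript
// Hypothetical sketch of the argument list described by livestreamDownloadInfo:
// every monitored livestream process gets `--wait-for-video 10`, which asks
// yt-dlp to wait for a scheduled stream, retrying every 10 seconds.
// Only `--wait-for-video` comes from the source; the function is illustrative.
function livestreamArgs(url: string, extraArgs: readonly string[] = []): string[] {
  const parsed = new URL(url) // throws a TypeError on malformed input
  if (parsed.protocol !== 'http:' && parsed.protocol !== 'https:') {
    throw new Error(`unsupported protocol: ${parsed.protocol}`)
  }
  // Caller-supplied arguments first, then the wait flag, then the URL itself.
  return [...extraArgs, '--wait-for-video', '10', url]
}
```

Validating the URL before spawning anything is a design choice of this sketch, not something the diff shows; it keeps a bad input from starting a process that would wait on a stream that can never begin.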

@@ -1,16 +1,15 @@
-import { atom, selector } from 'recoil'
-import { CustomTemplate } from '../types'
-import { ffetch } from '../lib/httpClient'
-import { serverURL } from './settings'
-import { pipe } from 'fp-ts/lib/function'
 import { getOrElse } from 'fp-ts/lib/Either'
+import { pipe } from 'fp-ts/lib/function'
+import { atom, selector } from 'recoil'
+import { ffetch } from '../lib/httpClient'
+import { CustomTemplate } from '../types'
+import { serverSideCookiesState, serverURL } from './settings'
 
-export const cookiesTemplateState = atom({
+export const cookiesTemplateState = selector({
   key: 'cookiesTemplateState',
-  default: localStorage.getItem('cookiesTemplate') ?? '',
-  effects: [
-    ({ onSet }) => onSet(e => localStorage.setItem('cookiesTemplate', e))
-  ]
+  get: ({ get }) => get(serverSideCookiesState)
+    ? '--cookies=cookies.txt'
+    : ''
 })
 
 export const customArgsState = atom({
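With this hunk, `cookiesTemplateState` stops being a `localStorage`-backed atom and becomes a selector derived from `serverSideCookiesState`: any non-empty cookie text on the server turns on `--cookies=cookies.txt`. The same rule, restated without Recoil so it can be checked in isolation; `joinArgs` is a hypothetical helper added only to show how the fragment might be combined with other arguments.

```typescript
// Framework-free restatement of the new cookiesTemplateState selector:
// any non-empty cookie text stored server-side turns on `--cookies=cookies.txt`.
function cookiesTemplate(serverSideCookies: string): string {
  return serverSideCookies ? '--cookies=cookies.txt' : ''
}

// Hypothetical helper (not in the diff): join non-empty argument fragments,
// e.g. custom arguments plus the cookies template, into one string.
function joinArgs(...fragments: string[]): string {
  return fragments
    .map(f => f.trim())
    .filter(f => f.length > 0)
    .join(' ')
}
```

As in the selector, the check is plain truthiness, so whitespace-only cookie text still counts as present and switches the flag on.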

@@ -1,4 +1,7 @@
+import { pipe } from 'fp-ts/lib/function'
+import { matchW } from 'fp-ts/lib/TaskEither'
 import { atom, selector } from 'recoil'
+import { ffetch } from '../lib/httpClient'
 import { prefersDarkMode } from '../utils'
 
 export const languages = [

@@ -187,13 +190,15 @@ export const rpcHTTPEndpoint = selector({
 }
 })
 
-export const cookiesState = atom({
-key: 'cookiesState',
-default: localStorage.getItem('yt-dlp-cookies') ?? '',
-effects: [
-({ onSet }) =>
-onSet(c => localStorage.setItem('yt-dlp-cookies', c))
-]
+export const serverSideCookiesState = selector<string>({
+key: 'serverSideCookiesState',
+get: async ({ get }) => await pipe(
+ffetch<Readonly<{ cookies: string }>>(`${get(serverURL)}/api/v1/cookies`),
+matchW(
+() => '',
+(r) => r.cookies
+)
+)()
 })
 
 const themeSelector = selector<ThemeNarrowed>({
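After these hunks, the `--cookies=cookies.txt` flag is no longer kept in `localStorage`. `serverSideCookiesState` reads `{ cookies: string }` from `GET /api/v1/cookies` and falls back to an empty string on failure. `cookiesTemplateState` then emits the flag only when that string is non-empty.

The sketch below restates that logic without Recoil or fp-ts so it runs on its own. It makes two assumptions:
- The `Fetcher` type and the `fetchServerCookies`, `cookiesFlag` and `loadCookiesFlag` names are hypothetical.
- Treating a non-OK response as a failure is a guess about how `ffetch` behaves.

```typescript
// Hypothetical, dependency-free restatement of serverSideCookiesState + cookiesTemplateState.
// Not the project's code; the names and the Fetcher shape are illustrative assumptions.

// Minimal fetch-like signature so the sketch can be exercised without a network.
type Fetcher = (url: string) => Promise<{ ok: boolean; json(): Promise<unknown> }>

// Mirrors matchW(() => '', (r) => r.cookies): any failure collapses to ''.
async function fetchServerCookies(serverURL: string, fetcher: Fetcher): Promise<string> {
  try {
    const res = await fetcher(`${serverURL}/api/v1/cookies`)
    if (!res.ok) return '' // ASSUMPTION: ffetch turns non-OK responses into a Left
    const body = (await res.json()) as { cookies?: unknown }
    return typeof body.cookies === 'string' ? body.cookies : ''
  } catch {
    return ''
  }
}

// Mirrors cookiesTemplateState: the yt-dlp flag only appears when cookies are stored server side.
function cookiesFlag(serverCookies: string): string {
  return serverCookies ? '--cookies=cookies.txt' : ''
}

async function loadCookiesFlag(serverURL: string, fetcher: Fetcher): Promise<string> {
  return cookiesFlag(await fetchServerCookies(serverURL, fetcher))
}
```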

@@ -1,5 +1,10 @@
+import { pipe } from 'fp-ts/lib/function'
+import { of } from 'fp-ts/lib/Task'
+import { getOrElse } from 'fp-ts/lib/TaskEither'
 import { atom, selector } from 'recoil'
+import { ffetch } from '../lib/httpClient'
 import { rpcClientState } from './rpc'
+import { serverURL } from './settings'
 
 export const connectedState = atom({
 key: 'connectedState',

@@ -23,3 +28,14 @@ export const availableDownloadPathsState = selector({
 return res.result
 }
 })
+
+export const ytdlpVersionState = selector<string>({
+key: 'ytdlpVersionState',
+get: async ({ get }) => await pipe(
+ffetch<string>(`${get(serverURL)}/api/v1/version`),
+getOrElse(() => pipe(
+'unknown version',
+of
+)),
+)()
+})
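The new `ytdlpVersionState` selector fetches `/api/v1/version`. Through `getOrElse` from `fp-ts/lib/TaskEither`, it substitutes `'unknown version'` whenever the request yields a `Left`.

To show what that combinator does, here is a minimal dependency-free model of `TaskEither`, `Task.of` and `getOrElse`. It follows the fp-ts 2.x shape, where the fallback returns a `Task` rather than a plain value. The types are simplified stand-ins, not the fp-ts definitions, and `versionOrFallback` is a hypothetical helper.

```typescript
// Simplified stand-ins for the fp-ts types used by ytdlpVersionState (not the real library).
type Either<E, A> = { _tag: 'Left'; left: E } | { _tag: 'Right'; right: A }
type Task<A> = () => Promise<A>
type TaskEither<E, A> = Task<Either<E, A>>

// Like Task.of: lift a plain value into a Task.
function of<A>(a: A): Task<A> {
  return () => Promise.resolve(a)
}

// Like TaskEither.getOrElse: run the task, keep a Right, otherwise run the fallback Task.
function getOrElse<E, A>(onLeft: (e: E) => Task<A>): (ma: TaskEither<E, A>) => Task<A> {
  return (ma) => async () => {
    const result = await ma()
    return result._tag === 'Right' ? result.right : onLeft(result.left)()
  }
}

// Hypothetical helper equivalent to the selector's pipe(...)() body.
function versionOrFallback(fetchVersion: TaskEither<string, string>): Promise<string> {
  return getOrElse<string, string>(() => of('unknown version'))(fetchVersion)()
}
```

Because the fallback is applied inside the selector, `VersionIndicator` no longer needs its own error toast or local state.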

@@ -1,22 +1,20 @@
-import { TextField } from '@mui/material'
+import { Button, TextField } from '@mui/material'
 import * as A from 'fp-ts/Array'
 import * as E from 'fp-ts/Either'
 import * as O from 'fp-ts/Option'
+import { matchW } from 'fp-ts/lib/TaskEither'
 import { pipe } from 'fp-ts/lib/function'
 import { useMemo } from 'react'
-import { useRecoilState, useRecoilValue } from 'recoil'
+import { useRecoilValue } from 'recoil'
 import { Subject, debounceTime, distinctUntilChanged } from 'rxjs'
-import { cookiesTemplateState } from '../atoms/downloadTemplate'
-import { cookiesState, serverURL } from '../atoms/settings'
+import { serverSideCookiesState, serverURL } from '../atoms/settings'
 import { useSubscription } from '../hooks/observable'
 import { useToast } from '../hooks/toast'
 import { ffetch } from '../lib/httpClient'
 
 const validateCookie = (cookie: string) => pipe(
 cookie,
-cookie => cookie.replace(/\s\s+/g, ' '),
-cookie => cookie.replaceAll('\t', ' '),
-cookie => cookie.split(' '),
+cookie => cookie.split('\t'),
 E.of,
 E.flatMap(
 E.fromPredicate(

@@ -68,13 +66,19 @@ const validateCookie = (cookie: string) => pipe(
 ),
 )
 
+const noopValidator = (s: string): E.Either<string, string[]> => pipe(
+s,
+s => s.split('\t'),
+E.of
+)
+
+const isCommentOrNewLine = (s: string) => s === '' || s.startsWith('\n') || s.startsWith('#')
+
 const CookiesTextField: React.FC = () => {
 const serverAddr = useRecoilValue(serverURL)
-const [, setCookies] = useRecoilState(cookiesTemplateState)
-const [savedCookies, setSavedCookies] = useRecoilState(cookiesState)
+const savedCookies = useRecoilValue(serverSideCookiesState)
 
 const { pushMessage } = useToast()
-const flag = '--cookies=cookies.txt'
 
 const cookies$ = useMemo(() => new Subject<string>(), [])
 

@@ -86,28 +90,41 @@ const CookiesTextField: React.FC = () => {
 })
 })()
 
+const deleteCookies = () => pipe(
+ffetch(`${serverAddr}/api/v1/cookies`, {
+method: 'DELETE',
+}),
+matchW(
+(l) => pushMessage(l, 'error'),
+(_) => {
+pushMessage('Deleted cookies', 'success')
+pushMessage(`Reload the page to apply the changes`, 'info')
+}
+)
+)()
+
 const validateNetscapeCookies = (cookies: string) => pipe(
 cookies,
 cookies => cookies.split('\n'),
-cookies => cookies.filter(f => !f.startsWith('\n')), // empty lines
-cookies => cookies.filter(f => !f.startsWith('# ')), // comments
-cookies => cookies.filter(Boolean), // empty lines
-A.map(validateCookie),
-A.mapWithIndex((i, either) => pipe(
+A.map(c => isCommentOrNewLine(c) ? noopValidator(c) : validateCookie(c)), // validate line
+A.mapWithIndex((i, either) => pipe( // detect errors and return the either
 either,
-E.matchW(
-(l) => pushMessage(`Error in line ${i + 1}: ${l}`, 'warning'),
-() => E.isRight(either)
+E.match(
+(l) => {
+pushMessage(`Error in line ${i + 1}: ${l}`, 'warning')
+return either
+},
+(_) => either
 ),
 )),
-A.filter(Boolean),
-A.match(
-() => false,
-(c) => {
-pushMessage(`Valid ${c.length} Netscape cookies`, 'info')
-return true
-}
-)
+A.filter(c => E.isRight(c)), // filter the line who didn't pass the validation
+A.map(E.getOrElse(() => new Array<string>())), // cast the array of eithers to an array of tokens
+A.filter(f => f.length > 0), // filter the empty tokens
+A.map(f => f.join('\t')), // join the tokens in a TAB separated string
+A.reduce('', (c, n) => `${c}${n}\n`), // reduce all to a single string separated by \n
+parsed => parsed.length > 0 // if nothing has passed the validation return none
+? O.some(parsed)
+: O.none
 )
 
 useSubscription(
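The reworked `validateNetscapeCookies` no longer filters comments and blank lines out. It works like this:
- Lines matching `isCommentOrNewLine` pass through `noopValidator` unchanged.
- Every other line goes through `validateCookie`.
- Each failing line produces a warning toast and is dropped.
- The surviving lines are re-joined with TABs and newlines, and `O.none` is returned when nothing survives.

The sketch below reproduces that flow in plain TypeScript. The predicates inside `validateCookie` fall outside these hunks. The seven-field and numeric-expiry checks used here are therefore assumptions, based on the usual Netscape `cookies.txt` layout: domain, include-subdomains flag, path, secure flag, expiry, name, value. `Valid`, `Invalid`, `LineResult` and the `warn` callback are hypothetical.

```typescript
// Hypothetical, dependency-free sketch of the revised validateNetscapeCookies flow.
type Valid = { ok: true; tokens: string[] }
type Invalid = { ok: false; error: string }
type LineResult = Valid | Invalid

// Same predicate as in the diff: empty lines and comments are not validated.
const isCommentOrNewLine = (s: string) => s === '' || s.startsWith('\n') || s.startsWith('#')

// Counterpart of noopValidator: keep the line as it is, split on TABs.
const noopValidator = (s: string): LineResult => ({ ok: true, tokens: s.split('\t') })

// Stand-in for validateCookie. ASSUMPTION: 7 TAB-separated fields with a numeric expiry.
const validateCookie = (line: string): LineResult => {
  const tokens = line.split('\t')
  if (tokens.length !== 7) {
    return { ok: false, error: `expected 7 TAB-separated fields, found ${tokens.length}` }
  }
  if (!/^\d+$/.test(tokens[4])) {
    return { ok: false, error: 'expiry is not a Unix timestamp' }
  }
  return { ok: true, tokens }
}

// Returns the cleaned cookie file, or undefined when no line survived (the O.none case).
function validateNetscapeCookies(cookies: string, warn: (message: string) => void): string | undefined {
  const results = cookies
    .split('\n')
    .map((line): LineResult => (isCommentOrNewLine(line) ? noopValidator(line) : validateCookie(line)))

  // Report every rejected line with its 1-based number, as the mapWithIndex step does.
  results.forEach((result, i) => {
    if (result.ok === false) warn(`Error in line ${i + 1}: ${result.error}`)
  })

  // The original's `f.length > 0` filter is omitted: split() always yields at least one token.
  const parsed = results
    .filter((r): r is Valid => r.ok)
    .map(r => r.tokens.join('\t'))
    .reduce((acc, line) => `${acc}${line}\n`, '')

  return parsed.length > 0 ? parsed : undefined
}
```

The result keeps a trailing newline, which is why the subscriber in the next hunk calls `some.trimEnd()` before submitting.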

@@ -117,22 +134,17 @@ const CookiesTextField: React.FC = () => {
 ),
 (cookies) => pipe(
 cookies,
-cookies => {
-setSavedCookies(cookies)
-return cookies
-},
 validateNetscapeCookies,
-O.fromPredicate(f => f === true),
 O.match(
-() => setCookies(''),
-async () => {
+() => pushMessage('No valid cookies', 'warning'),
+async (some) => {
 pipe(
-await submitCookies(cookies),
+await submitCookies(some.trimEnd()),
 E.match(
 (l) => pushMessage(`${l}`, 'error'),
 () => {
-pushMessage(`Saved Netscape cookies`, 'success')
-setCookies(flag)
+pushMessage(`Saved ${some.split('\n').length} Netscape cookies`, 'success')
+pushMessage('Reload the page to apply the changes', 'info')
 }
 )
 )

@@ -142,6 +154,7 @@ const CookiesTextField: React.FC = () => {
 )
 
 return (
+<>
 <TextField
 label="Netscape Cookies"
 multiline

@@ -151,6 +164,8 @@ const CookiesTextField: React.FC = () => {
 defaultValue={savedCookies}
 onChange={(e) => cookies$.next(e.currentTarget.value)}
 />
+<Button onClick={deleteCookies}>Delete cookies</Button>
+</>
 )
 }
 
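The new Delete cookies button calls `deleteCookies`. That function sends `DELETE /api/v1/cookies` via `ffetch`, shows errors as toasts, and on success asks the user to reload.

A plain-`fetch` counterpart might look like the sketch below. It makes two assumptions:
- The `X-Authentication` header comes from the code removed from `VersionIndicator`; whether `ffetch` sends the token the same way is an assumption.
- `deleteServerCookies`, `Fetcher` and `Notify` are hypothetical names.

```typescript
// Hypothetical plain-fetch counterpart of deleteCookies; not the project's implementation.
type Fetcher = (
  url: string,
  init: { method: string; headers: Record<string, string> },
) => Promise<{ ok: boolean; text(): Promise<string> }>

type Notify = (message: string, severity: 'success' | 'info' | 'error') => void

async function deleteServerCookies(
  serverAddr: string,
  token: string,
  fetcher: Fetcher,
  notify: Notify,
): Promise<boolean> {
  try {
    const res = await fetcher(`${serverAddr}/api/v1/cookies`, {
      method: 'DELETE',
      // ASSUMPTION: same auth header the removed VersionIndicator code set by hand.
      headers: { 'X-Authentication': token },
    })
    if (!res.ok) {
      notify(await res.text(), 'error')
      return false
    }
    // Same success messages as the right branch of matchW in the diff.
    notify('Deleted cookies', 'success')
    notify('Reload the page to apply the changes', 'info')
    return true
  } catch (err) {
    notify(String(err), 'error')
    return false
  }
}
```

In a browser, the global `fetch` satisfies the `Fetcher` shape, so a call could look like `deleteServerCookies(serverAddr, localStorage.getItem('token') ?? '', fetch, pushMessage)`.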

@@ -14,7 +14,7 @@ const DownloadsGridView: React.FC = () => {
 const { client } = useRPC()
 const { pushMessage } = useToast()
 
-const stop = (r: RPCResult) => r.progress.process_status === ProcessStatus.Completed
+const stop = (r: RPCResult) => r.progress.process_status === ProcessStatus.COMPLETED
 ? client.clear(r.id)
 : client.kill(r.id)
 

@@ -133,7 +133,7 @@ const DownloadsTableView: React.FC = () => {
 window.open(`${serverAddr}/archive/d/${encoded}?token=${localStorage.getItem('token')}`)
 }
 
-const stop = (r: RPCResult) => r.progress.process_status === ProcessStatus.Completed
+const stop = (r: RPCResult) => r.progress.process_status === ProcessStatus.COMPLETED
 ? client.clear(r.id)
 : client.kill(r.id)
 

@@ -37,7 +37,9 @@ const Footer: React.FC = () => {
 <div style={{ display: 'flex', gap: 4, alignItems: 'center' }}>
 {/* TODO: make it dynamic */}
 <Chip label="RPC v3.2.0" variant="outlined" size="small" />
+<Suspense>
 <VersionIndicator />
+</Suspense>
 </div>
 <div style={{ display: 'flex', gap: 4, 'alignItems': 'center' }}>
 <div style={{

@@ -101,6 +101,7 @@ export default function FormatsGrid({
 >
 {format.format_note} - {format.vcodec === 'none' ? format.acodec : format.vcodec}
 {(format.filesize_approx > 0) ? " (~" + Math.round(format.filesize_approx / 1024 / 1024) + " MiB)" : ""}
+{format.language}
 </Button>
 </Grid>
 ))
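With `language` added to `DLFormat`, each format button in `FormatsGrid` now shows:
- the format note;
- the relevant codec (the audio codec when `vcodec` is `'none'`);
- an approximate size in MiB (bytes divided by 1024 twice, rounded);
- the language.

The same text can be built by one pure function, sketched below. `FormatSummary` and `formatLabel` are hypothetical. Two choices belong to this sketch, not to the JSX in the hunk, which has no explicit separator: the space before the language, and the handling of a missing or null language.

```typescript
// Hypothetical helper that builds the FormatsGrid button text in one place.
type FormatSummary = {
  format_note: string
  vcodec: string
  acodec: string
  filesize_approx: number
  language?: string | null // ASSUMPTION: tolerate formats that report no language
}

function formatLabel(f: FormatSummary): string {
  // Audio-only formats report vcodec 'none', so show the audio codec instead.
  const codec = f.vcodec === 'none' ? f.acodec : f.vcodec
  // filesize_approx is in bytes; dividing by 1024 twice gives MiB.
  const size = f.filesize_approx > 0
    ? ` (~${Math.round(f.filesize_approx / 1024 / 1024)} MiB)`
    : ''
  const language = f.language ? ` ${f.language}` : ''
  return `${f.format_note} - ${codec}${size}${language}`
}
```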

@@ -1,32 +1,9 @@
 import { Chip, CircularProgress } from '@mui/material'
-import { useEffect, useState } from 'react'
 import { useRecoilValue } from 'recoil'
-import { serverURL } from '../atoms/settings'
-import { useToast } from '../hooks/toast'
+import { ytdlpVersionState } from '../atoms/status'
 
 const VersionIndicator: React.FC = () => {
-const serverAddr = useRecoilValue(serverURL)
+const version = useRecoilValue(ytdlpVersionState)
 
-const [version, setVersion] = useState('')
-const { pushMessage } = useToast()
-
-const fetchVersion = async () => {
-const res = await fetch(`${serverAddr}/api/v1/version`, {
-headers: {
-'X-Authentication': localStorage.getItem('token') ?? ''
-}
-})
-
-if (!res.ok) {
-return pushMessage(await res.text(), 'error')
-}
-
-setVersion(await res.json())
-}
-
-useEffect(() => {
-fetchVersion()
-}, [])
-
 return (
 version

@@ -82,7 +82,9 @@ export class RPCClient {
 : ''
 
 const sanitizedArgs = this.argsSanitizer(
-req.args.replace('-o', '').replace(rename, '')
+req.args
+.replace('-o', '')
+.replace(rename, '')
 )
 
 if (req.playlist) {

@@ -177,14 +179,14 @@ export class RPCClient {
 }
 
 public killLivestream(url: string) {
-return this.sendHTTP<LiveStreamProgress>({
+return this.sendHTTP({
 method: 'Service.KillLivestream',
 params: [url]
 })
 }
 
 public killAllLivestream() {
-return this.sendHTTP<LiveStreamProgress>({
+return this.sendHTTP({
 method: 'Service.KillAllLivestream',
 params: []
 })

@@ -39,10 +39,11 @@ type DownloadInfo = {
 }
 
 export enum ProcessStatus {
-Pending = 0,
-Downloading,
-Completed,
-Errored,
+PENDING = 0,
+DOWNLOADING,
+COMPLETED,
+ERRORED,
+LIVESTREAM,
 }
 
 type DownloadProgress = {

@@ -81,6 +82,7 @@ export type DLFormat = {
 vcodec: string
 acodec: string
 filesize_approx: number
+language: string
 }
 
 export type DirectoryEntry = {

@@ -110,7 +112,7 @@ export enum LiveStreamStatus {
 }
 
 export type LiveStreamProgress = Record<string, {
-Status: LiveStreamStatus
-WaitTime: string
-LiveDate: string
+status: LiveStreamStatus
+waitTime: string
+liveDate: string
 }>

@@ -56,14 +56,16 @@ export function isRPCResponse(object: any): object is RPCResponse<any> {
 
 export function mapProcessStatus(status: ProcessStatus) {
 switch (status) {
-case ProcessStatus.Pending:
+case ProcessStatus.PENDING:
 return 'Pending'
-case ProcessStatus.Downloading:
+case ProcessStatus.DOWNLOADING:
 return 'Downloading'
-case ProcessStatus.Completed:
+case ProcessStatus.COMPLETED:
 return 'Completed'
-case ProcessStatus.Errored:
+case ProcessStatus.ERRORED:
 return 'Error'
+case ProcessStatus.LIVESTREAM:
+return 'Livestream'
 default:
 return 'Pending'
 }
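`ProcessStatus` is a numeric enum, so the renamed members keep their values (`PENDING` = 0 through `ERRORED` = 3), and the appended `LIVESTREAM` member gets 4. Assuming the backend reports statuses with the same numbering, existing numeric values still map to the same members.

As an alternative to the `switch` in `mapProcessStatus`, a `Record` keyed by the enum makes the compiler reject a missing label the next time a member is added. This is a sketch, not the project's code; `processStatusLabels` and `labelFor` are hypothetical names.

```typescript
// Same member order as the renamed enum in the diff; TypeScript numbers them 0..4.
enum ProcessStatus {
  PENDING = 0,
  DOWNLOADING,
  COMPLETED,
  ERRORED,
  LIVESTREAM,
}

// Record<ProcessStatus, string> fails to compile if any member lacks a label.
const processStatusLabels: Record<ProcessStatus, string> = {
  [ProcessStatus.PENDING]: 'Pending',
  [ProcessStatus.DOWNLOADING]: 'Downloading',
  [ProcessStatus.COMPLETED]: 'Completed',
  [ProcessStatus.ERRORED]: 'Error',
  [ProcessStatus.LIVESTREAM]: 'Livestream',
}

// Numbers that match no member fall back to 'Pending', like the switch's default branch.
function labelFor(status: number): string {
  return processStatusLabels[status as ProcessStatus] ?? 'Pending'
}
```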

@@ -101,17 +101,17 @@ const LiveStreamMonitorView: React.FC = () => {
 >
 <TableCell>{k}</TableCell>
 <TableCell align='right'>
-{mapStatusToChip(progress[k].Status)}
+{mapStatusToChip(progress[k].status)}
 </TableCell>
 <TableCell align='right'>
-{progress[k].Status === LiveStreamStatus.WAITING
-? formatMicro(Number(progress[k].WaitTime))
+{progress[k].status === LiveStreamStatus.WAITING
+? formatMicro(Number(progress[k].waitTime))
 : "-"
 }
 </TableCell>
 <TableCell align='right'>
-{progress[k].Status === LiveStreamStatus.WAITING
-? new Date(progress[k].LiveDate).toLocaleString()
+{progress[k].status === LiveStreamStatus.WAITING
+? new Date(progress[k].liveDate).toLocaleString()
 : "-"
 }
 </TableCell>

@@ -18,7 +18,7 @@ import {
 Typography,
 capitalize
 } from '@mui/material'
-import { useEffect, useMemo, useState } from 'react'
+import { Suspense, useEffect, useMemo, useState } from 'react'
 import { useRecoilState } from 'recoil'
 import {
 Subject,

@@ -347,7 +347,9 @@ export default function Settings() {
 <Typography variant="h6" color="primary" sx={{ mb: 2 }}>
 Cookies
 </Typography>
+<Suspense>
 <CookiesTextField />
+</Suspense>
 </Grid>
 <Grid>
 <Stack direction="row">

16

go.mod


@@ -1,6 +1,6 @@
 module github.com/marcopeocchi/yt-dlp-web-ui
 
-go 1.22
+go 1.23
 
 require (
 github.com/asaskevich/EventBus v0.0.0-20200907212545-49d423059eef

@@ -11,11 +11,11 @@ require (
 github.com/google/uuid v1.6.0
 github.com/gorilla/websocket v1.5.3
 github.com/reactivex/rxgo/v2 v2.5.0
-golang.org/x/oauth2 v0.21.0
-golang.org/x/sync v0.7.0
-golang.org/x/sys v0.22.0
+golang.org/x/oauth2 v0.23.0
+golang.org/x/sync v0.8.0
+golang.org/x/sys v0.25.0
 gopkg.in/yaml.v3 v3.0.1
-modernc.org/sqlite v1.31.1
+modernc.org/sqlite v1.32.0
 )
 
 require (

@@ -32,9 +32,9 @@ require (
 github.com/stretchr/objx v0.5.2 // indirect
 github.com/stretchr/testify v1.9.0 // indirect
 github.com/teivah/onecontext v1.3.0 // indirect
-golang.org/x/crypto v0.25.0 // indirect
-modernc.org/gc/v3 v3.0.0-20240722195230-4a140ff9c08e // indirect
-modernc.org/libc v1.55.7 // indirect
+golang.org/x/crypto v0.26.0 // indirect
+modernc.org/gc/v3 v3.0.0-20240801135723-a856999a2e4a // indirect
+modernc.org/libc v1.60.1 // indirect
 modernc.org/mathutil v1.6.0 // indirect
 modernc.org/memory v1.8.0 // indirect
 modernc.org/strutil v1.2.0 // indirect

55

go.sum


@@ -17,8 +17,6 @@ github.com/go-chi/chi/v5 v5.1.0 h1:acVI1TYaD+hhedDJ3r54HyA6sExp3HfXq7QWEEY/xMw=
 github.com/go-chi/chi/v5 v5.1.0/go.mod h1:DslCQbL2OYiznFReuXYUmQ2hGd1aDpCnlMNITLSKoi8=
 github.com/go-chi/cors v1.2.1 h1:xEC8UT3Rlp2QuWNEr4Fs/c2EAGVKBwy/1vHx3bppil4=
 github.com/go-chi/cors v1.2.1/go.mod h1:sSbTewc+6wYHBBCW7ytsFSn836hqM7JxpglAy2Vzc58=
-github.com/go-jose/go-jose/v4 v4.0.3 h1:o8aphO8Hv6RPmH+GfzVuyf7YXSBibp+8YyHdOoDESGo=
-github.com/go-jose/go-jose/v4 v4.0.3/go.mod h1:NKb5HO1EZccyMpiZNbdUw/14tiXNyUJh188dfnMCAfc=
 github.com/go-jose/go-jose/v4 v4.0.4 h1:VsjPI33J0SB9vQM6PLmNjoHqMQNGPiZ0rHL7Ni7Q6/E=
 github.com/go-jose/go-jose/v4 v4.0.4/go.mod h1:NKb5HO1EZccyMpiZNbdUw/14tiXNyUJh188dfnMCAfc=
 github.com/golang-jwt/jwt/v5 v5.2.1 h1:OuVbFODueb089Lh128TAcimifWaLhJwVflnrgM17wHk=

@@ -61,31 +59,33 @@ github.com/teivah/onecontext v1.3.0/go.mod h1:hoW1nmdPVK/0jrvGtcx8sCKYs2PiS4z0zz
 go.uber.org/goleak v1.1.10 h1:z+mqJhf6ss6BSfSM671tgKyZBFPTTJM+HLxnhPC3wu0=
 go.uber.org/goleak v1.1.10/go.mod h1:8a7PlsEVH3e/a/GLqe5IIrQx6GzcnRmZEufDUTk4A7A=
 golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=
-golang.org/x/crypto v0.25.0 h1:ypSNr+bnYL2YhwoMt2zPxHFmbAN1KZs/njMG3hxUp30=
-golang.org/x/crypto v0.25.0/go.mod h1:T+wALwcMOSE0kXgUAnPAHqTLW+XHgcELELW8VaDgm/M=
+golang.org/x/crypto v0.26.0 h1:RrRspgV4mU+YwB4FYnuBoKsUapNIL5cohGAmSH3azsw=
+golang.org/x/crypto v0.26.0/go.mod h1:GY7jblb9wI+FOo5y8/S2oY4zWP07AkOJ4+jxCqdqn54=
 golang.org/x/lint v0.0.0-20190930215403-16217165b5de h1:5hukYrvBGR8/eNkX5mdUezrA6JiaEZDtJb9Ei+1LlBs=
 golang.org/x/lint v0.0.0-20190930215403-16217165b5de/go.mod h1:6SW0HCj/g11FgYtHlgUYUwCkIfeOF89ocIRzGO/8vkc=
-golang.org/x/mod v0.16.0 h1:QX4fJ0Rr5cPQCF7O9lh9Se4pmwfwskqZfq5moyldzic=
-golang.org/x/mod v0.16.0/go.mod h1:hTbmBsO62+eylJbnUtE2MGJUyE7QWk4xUqPFrRgJ+7c=
 golang.org/x/mod v0.19.0 h1:fEdghXQSo20giMthA7cd28ZC+jts4amQ3YMXiP5oMQ8=
+golang.org/x/mod v0.19.0/go.mod h1:hTbmBsO62+eylJbnUtE2MGJUyE7QWk4xUqPFrRgJ+7c=
 golang.org/x/net v0.0.0-20190311183353-d8887717615a/go.mod h1:t9HGtf8HONx5eT2rtn7q6eTqICYqUVnKs3thJo3Qplg=
 golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=